Vigil: Open-source Security Scanner for LLM Models Like ChatGPT

An begin-provide security scanner, developed by Git Hub user Adam Swanda, modified into as soon as launched to procure the safety of the LLM mannequin. This mannequin is utilized by chat assistants comparable to ChatGPT.

This scanner, which is named ‘Vigil’, is particularly designed to analyze the LLM mannequin and assess its security vulnerabilities. By the utilization of Vigil, builders can guarantee their chat assistants are safe and get for narrate by the overall public.

As the title suggests, a mountainous language mannequin can imprint and get any language. LLMs learn these abilities by the utilization of mountainous amounts of knowledge to learn billions of components throughout practising and by the utilization of loads of computing energy while they’re discovering out and running.

Vigil System

If you happen to is at possibility of be making an are trying to prefer to develop obvious the protection and security of your gadget, Vigil is a precious tool for you. Vigil is a Python module and REST API that might maybe perchance enable you build quick injections, jailbreaks, and assorted doubtless threats by evaluating Corpulent Language Model prompts and responses against varied scanners.

The repository moreover contains datasets and detection signatures, making it easy so that you can begin self-hosting. With Vigil, you might maybe relaxation assured that your gadget is get and safe.

Within the period in-between, this application is in alpha sorting out and should be idea about experimental.

Is Your Storage & Backup Programs Totally Safe? – Look 40-2d Tour of SafeGuard

StorageGuard scans, detects, and fixes security misconfigurations and vulnerabilities across a full lot of storage and backup gadgets.

ADVANTAGES:

- Note LLM prompts for ceaselessly extinct injections and inputs that display cowl possibility.

- Use Vigil as a Python library or REST API

- Scanners are modular and simply extensible

- Evaluate detections and pipelines with Vigil-Eval (coming soon)

- Available scan modules

- Helps native embeddings and/or OpenAI

- Signatures and embeddings for fashionable attacks

- Personalized detections by process of YARA signatures

To guard against identified attacks, one efficient contrivance is the utilization of a Vigil to quick injection technique. This vogue contains detecting identified tactics extinct by attackers, thereby strengthening your defense against the more fashionable or documented attacks.

Advised Injection When an attacker generates inputs to manipulate a mountainous language mannequin (LLM), the LLM becomes inclined and unknowingly carries out the attacker’s targets.

That is at possibility of be done without delay by “jailbreaking” the quick on the gadget or finally by manipulating external inputs, which might maybe perchance merely lead to social engineering, knowledge exfiltration, and assorted problems.

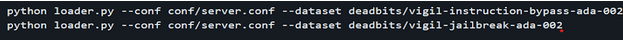

If you happen to is at possibility of be making an are trying to prefer to load the vigil by acceptable datasets for embedding the mannequin with the loader.py utility.

The command scanners search for the submitted prompts; every one can abet with the last identification. Scanners are:

- Vector database

- YARA / heuristics

- Transformer mannequin

- Advised-response similarity

- Canary Tokens

Source credit : cybersecuritynews.com